Although ISS was built for a very common need, its apparent complexity at first glance may be misleading. This section explains, simply yet completely, which needs ISS addresses and how it achieves its goals.

It is very common to have a mix of lightweight and heavyweight tasks executed in one or several web applications running in an application server. These tasks can arrive in an unpredictable sequence and with an unpredictable load. When a significant number of heavy, long-lived operations execute at the same time in a multi-threaded server, the JVM's responsiveness drops significantly. This loss of responsiveness is felt by all running processes and by every new one that arrives.

The described behaviour is not uncommon and, in the vast majority of situations, cannot be predicted. When it occurs, it degrades performance for the entire user community, and it can even lead to far more serious situations. As more heavy processes are launched in a multi-threaded server, more resources are allocated. As the server machine runs out of resources, its performance starts to drop exponentially, and all processes, slow or fast, start responding more slowly. The result can be an exponential execution-time curve that ends with several processes terminating with errors, or even with the JVM or some low-level management API collapsing under the load.

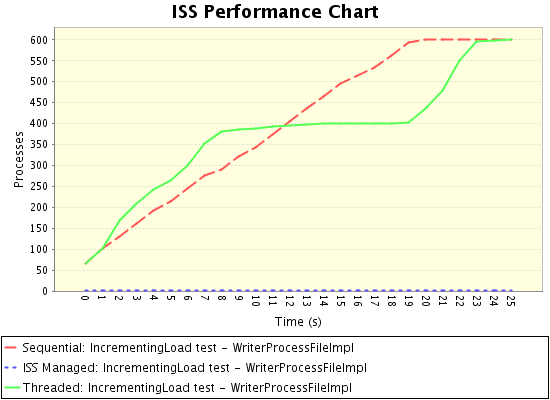

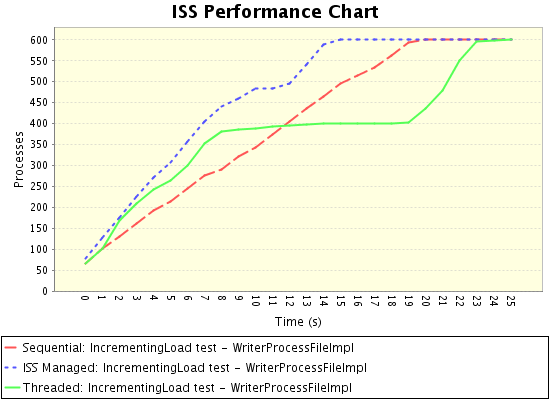

Note: The application used to obtain this chart writes a given number of lines to a file on disk. Since the disk is accessed concurrently, we can expect a heavy burden on it for larger numbers of lines. All the graphs throughout the documentation were produced with the same application, which is described in detail in the Sample Application section.

Two things are for sure:

So what would be the desired behaviour?

The problem seems to lie not in executing all the requests, but in executing them all at the same time! So what if we could queue them so that they do not overload the server? This, of course, has the downside of a less immediate response... or does it?

Normally, in a multi-threaded server, it is better to execute several services in parallel than serially. This is because a single process normally does not use all of the machine's resources to the fullest, so running more processes at the same time, sharing those resources, tends to make better use of the server's full potential. On the other hand, there is a point in the server's load curve where the resources are already being used to the fullest, and adding more parallel processes (threads) starts to do more harm than good to performance.

Basically, there is a point at which the sum of the sequential execution times becomes less than the time needed to execute all the processes at the same time. This point, however, can be nearly impossible to determine. First, multi-tiered applications are composed of so many pieces working together that calculating it can be a very complex task. Second, and most importantly, the load on complex systems is normally the sum of requests from many possible clients across various media or channels, and this load varies over time. A configuration that gives a perfect result at one day or time can give a disastrous result at the next. So trying to calculate this point is like chasing the holy grail... much talked about, but no one can lay their eyes upon it!
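Written as an inequality (the notation is ours, not part of ISS): let $t_i$ be the time process $i$ takes when it runs alone, and $T_{\parallel}(n)$ the wall-clock time needed to finish all $n$ processes when they are started together. Sequential execution becomes the better choice once

$$
\sum_{i=1}^{n} t_i \;<\; T_{\parallel}(n)
$$

Both sides depend on the mix of processes and on everything else the server is doing at that moment, which is why this crossover cannot be computed once and for all.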

But what if this point could float? What if a monitoring process could determine when the critical point has been reached and manage the system's load accordingly, always watching the JVM to see whether the optimal point has shifted and should be adjusted for the best possible performance?

This is the principle, the goal of ISS! But we'll soon get to that.

The asynchronous sequential approach to process execution ensures that the server never carries a heavier load than it can process (unless a design flaw allows a single process to demand more than the server can handle). The resources of the JVM and of other external systems are available to each process exclusively while it executes. Sequential management of the processes to execute is, in many cases, the best solution, and it can be achieved by managing a queue of processes in FIFO (First-In, First-Out) manner.
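The FIFO approach can be sketched in Java, assuming a process can be modelled as a plain `Runnable`. This is not ISS code: `SequentialProcessQueue` and its methods are hypothetical names, and the sketch relies on the JDK's `Executors.newSingleThreadExecutor()`, which runs submitted tasks one at a time, in submission order.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

// Hypothetical sketch (not ISS code): a FIFO queue whose processes run strictly one at a time.
class SequentialProcessQueue {
    // A single worker thread drains an internal FIFO queue, so at most one
    // process holds the server's resources at any given moment.
    private final ExecutorService worker = Executors.newSingleThreadExecutor();

    void submit(Runnable process) {
        worker.execute(process);
    }

    // Stops accepting new processes and waits for the queued ones to finish.
    boolean drain(long timeoutSeconds) {
        worker.shutdown();
        try {
            return worker.awaitTermination(timeoutSeconds, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }

    // Submits n processes that record their id, and returns the completion order.
    static List<Integer> demo(int n) {
        List<Integer> order = Collections.synchronizedList(new ArrayList<>());
        SequentialProcessQueue queue = new SequentialProcessQueue();
        for (int i = 0; i < n; i++) {
            final int id = i;
            queue.submit(() -> order.add(id));
        }
        queue.drain(10);
        return new ArrayList<>(order);
    }

    public static void main(String[] args) {
        System.out.println(demo(5)); // prints [0, 1, 2, 3, 4]
    }
}
```

Because there is only one worker, the completion order always matches the submission order, and no process ever competes with another for resources.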

But what about the cases where a single process does not use all the available resources? Then some of the maximum possible performance is lost, since under heavy load the server's full potential would never be used. So a sequential queue of requests would achieve the goal of managing the server's resources so as not to take on more than it can handle, but it would not take advantage of the server's full potential.

This can be clearly seen in the performance graph by analyzing the sequential execution line, which represents the performance one can expect from sequential, one-at-a-time execution of processes.

In a web-based world of applications, there is a very good chance of facing hundreds or even thousands of simultaneous requests. Imagine having to wait through a sequential queue of thousands of medium- or long-lived requests... without any kind of feedback! So when ISS manages this kind of request, the user interacts with an asynchronous queue of sequential execution.

A request is made and delegated for execution. The user receives an immediate response stating the status of the request; hence the asynchronous behaviour associated with queued requests. This will be explained in more detail when we talk about how to use ISS, but it was necessary to introduce it here.
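A minimal Java sketch of this asynchronous behaviour, assuming the caller only needs a ticket and a coarse status. The class `AsyncProcessManager`, its `Status` values and the ticket scheme are hypothetical illustrations, not the ISS API: `submit` returns at once, and the queued process runs later on a single worker thread.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical sketch (not the ISS API): queue a request, answer immediately.
class AsyncProcessManager {
    enum Status { QUEUED, RUNNING, DONE, FAILED }

    // One worker drains the FIFO queue, so queued requests run sequentially.
    private final ExecutorService worker = Executors.newSingleThreadExecutor();
    private final Map<Long, Status> statuses = new ConcurrentHashMap<>();
    private final AtomicLong nextTicket = new AtomicLong(1);

    // Returns a ticket right away; the process itself runs later.
    long submit(Runnable process) {
        long ticket = nextTicket.getAndIncrement();
        statuses.put(ticket, Status.QUEUED); // recorded before the worker can start it
        worker.execute(() -> {
            statuses.put(ticket, Status.RUNNING);
            try {
                process.run();
                statuses.put(ticket, Status.DONE);
            } catch (RuntimeException e) {
                statuses.put(ticket, Status.FAILED);
            }
        });
        return ticket;
    }

    // What the user sees when polling for the state of a request.
    Status status(long ticket) {
        return statuses.get(ticket);
    }

    // Stops accepting requests and waits for the queued ones to complete.
    boolean shutdownAndWait(long timeoutSeconds) {
        worker.shutdown();
        try {
            return worker.awaitTermination(timeoutSeconds, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }
}
```

A web layer could hand the ticket back to the user in the immediate response and later poll `status(ticket)`, which supplies the feedback a plain sequential queue lacks.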

So, executing everything at the same time is bad... and executing one at a time does not perform well! So what is the solution?

As always, the best solution is a compromise. The best performance would come from using the server's multi-threaded power up to the point where resource usage would become a problem, and from then on keeping the server's load at that point of maximum performance, starting new processes as soon as others finish.
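The compromise can be sketched as bounded parallelism: up to a fixed number of processes run at once and the rest wait in FIFO order. This sketch assumes the limit is known in advance, which is exactly the assumption ISS avoids by adjusting it dynamically; `BoundedProcessExecutor` is a hypothetical name, and the bounding itself comes from the JDK's `Executors.newFixedThreadPool`.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical sketch: at most `limit` processes run in parallel; the rest queue up.
class BoundedProcessExecutor {
    private final ExecutorService pool;
    private final AtomicInteger running = new AtomicInteger();
    private final AtomicInteger peak = new AtomicInteger();

    BoundedProcessExecutor(int limit) {
        // A fixed pool has exactly `limit` worker threads; extra submissions wait
        // in its unbounded FIFO queue and start as soon as a worker is free.
        this.pool = Executors.newFixedThreadPool(limit);
    }

    void submit(Runnable process) {
        pool.execute(() -> {
            // Track how many processes are executing right now, and the maximum seen.
            int now = running.incrementAndGet();
            peak.accumulateAndGet(now, Math::max);
            try {
                process.run();
            } finally {
                running.decrementAndGet();
            }
        });
    }

    int peakConcurrency() {
        return peak.get();
    }

    boolean shutdownAndWait(long timeoutSeconds) {
        pool.shutdown();
        try {
            return pool.awaitTermination(timeoutSeconds, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }
}
```

Below the limit this behaves like pure multi-threaded execution; above it, it behaves like a sequential queue feeding a fixed number of slots. The hard part, choosing `limit`, is what the rest of this section is about.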

This is the goal, the strategy behind ISS. This is how we can expect to use the full potential of our server without the risk that, under certain circumstances, the load causes a breakdown in the server's performance or responsiveness, or even the not-so-unheard-of DoS (Denial of Service).

One small detail is still important to mention. It can decide whether all this theoretical rambling is put to good use or ends up as just another good idea that aimed too high.

The crucial point in all of the above is continuously calculating the point where maximum parallel execution is achieved: the point where adding more parallel load decreases performance, and where we should hold back new processes from starting until some of the running ones finish their job. This is the reason for the "I" in the ISS name. The intelligence comes from determining and continuously adjusting this performance breaking point, and managing the executing processes accordingly.
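The exact adjustment rule ISS uses is not described here, so the following Java sketch swaps in a plain hill-climbing rule purely as an illustration: after each observation window, keep moving the parallel limit in the same direction while throughput improves, and reverse when it drops. `AdaptiveLimit` and the toy load curve in `toyThroughput` (peaking at 8 parallel processes) are invented for this example.

```java
// Hypothetical sketch: hill climbing on measured throughput.
// This is NOT the documented ISS algorithm, only an illustration of the idea.
class AdaptiveLimit {
    private final int min;
    private final int max;
    private int limit;
    private int direction = 1;          // +1 probes more parallelism, -1 probes less
    private double lastThroughput = -1; // negative means "no measurement yet"

    AdaptiveLimit(int min, int max, int initial) {
        if (min < 1 || min > max || initial < min || initial > max) {
            throw new IllegalArgumentException("require 1 <= min <= initial <= max");
        }
        this.min = min;
        this.max = max;
        this.limit = initial;
    }

    int current() {
        return limit;
    }

    // Called once per observation window with the throughput measured
    // (e.g. processes completed per second) while running at current().
    int update(double throughput) {
        if (lastThroughput >= 0 && throughput < lastThroughput) {
            direction = -direction; // the last step hurt performance: turn around
        }
        lastThroughput = throughput;
        limit = Math.max(min, Math.min(max, limit + direction));
        return limit;
    }

    // Invented toy load curve: throughput grows with parallelism up to 8
    // processes, then degrades as the simulated server saturates.
    static double toyThroughput(int parallel) {
        return parallel <= 8 ? parallel : 8.0 - 0.5 * (parallel - 8);
    }

    public static void main(String[] args) {
        AdaptiveLimit adaptive = new AdaptiveLimit(1, 32, 2);
        for (int window = 0; window < 20; window++) {
            adaptive.update(toyThroughput(adaptive.current()));
        }
        System.out.println(adaptive.current()); // prints 8
    }
}
```

Starting at 2, the limit climbs to the toy curve's peak and then keeps oscillating between 7 and 9, which is the "floating point" behaviour described earlier. A real controller would also need to smooth noisy measurements before reacting to them.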

ISS maintains an efficient statistical monitor that continuously tracks the average execution time of the last X processes. The process manager constantly consults this data to decide how many processes to allow to run at the same time, in multi-threaded manner.
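A statistical monitor of this kind can be kept cheap with a sliding window and a running sum, so each new sample costs constant time. The sketch below is hypothetical: `ExecutionTimeMonitor` is an invented name, and `windowSize` plays the role of the "X" above.

```java
import java.util.ArrayDeque;
import java.util.Deque;

// Hypothetical sketch: average execution time of the last `windowSize` processes.
class ExecutionTimeMonitor {
    private final int windowSize;
    private final Deque<Long> window = new ArrayDeque<>();
    private long sum; // running sum of the samples currently in the window

    ExecutionTimeMonitor(int windowSize) {
        if (windowSize <= 0) throw new IllegalArgumentException("windowSize must be positive");
        this.windowSize = windowSize;
    }

    // O(1) per sample: add the newest, evict the oldest once the window is full.
    synchronized void record(long elapsedMillis) {
        window.addLast(elapsedMillis);
        sum += elapsedMillis;
        if (window.size() > windowSize) {
            sum -= window.removeFirst();
        }
    }

    synchronized double average() {
        return window.isEmpty() ? 0.0 : (double) sum / window.size();
    }

    synchronized int samples() {
        return window.size();
    }

    // Runs a process and records its wall-clock duration in milliseconds.
    void timed(Runnable process) {
        long start = System.nanoTime();
        try {
            process.run();
        } finally {
            record((System.nanoTime() - start) / 1_000_000);
        }
    }
}
```

With `windowSize` set to 3, recording 10, 20, 30 and then 60 ms leaves only the last three samples in the window, so old executions stop influencing the average. A process manager would read `average()` at the end of each observation window to decide whether to change the number of parallel processes.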

Since this data can depend heavily on the processes being managed, ISS, aside from its dynamic behaviour, allows custom tuning of the key parameters that determine how it behaves.

Parameters like the following ones can be set to tune ISS to a particular type of process:

Others exist, but these give a good picture of the custom tuning possibilities ISS offers.

So, with all that said... let's see ISS in action.

Let's take a look at the following performance graph.

You can see that the sequential line performs worse than the others... at least up to a certain point.

You can also see the multi-threaded line perform very well until a concurrent-execution bottleneck is reached at around 400 processes.

And in the blue line you can see the ISS performance curve. It takes advantage of the server's multi-threaded abilities alongside the pure multi-threaded line, but instead of slowing down when the maximum load is reached, it switches to a monitored, managed schedule of process execution until all processes have been executed. In other words, ISS gets the combined advantages of parallel and sequential execution out of the server.

The theory looks real!

You can, of course, question the veracity of these results, and claim that the test case illustrated here was tailored for the best ISS performance.

This is, of course, not true... but in a way, you have a point! The various tests we ran to validate ISS's potential gave several kinds of results. Let's talk about some of them:

ISS, of course, has a management overhead: it adds a small amount of time to manage the execution of each process. If the processes are very fast to execute, it is normally not a good idea to use ISS, since the overhead becomes relevant and no real gain is obtained from its management. You will probably even see a loss because of it.
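A quick way to see why: with $o$ the management overhead per process and $t$ the process's own execution time, the relative cost of management is $o/t$. Taking a purely illustrative $o = 1$ ms (not a measured ISS figure):

$$
\frac{o}{t} = \frac{1\,\text{ms}}{2\,\text{ms}} = 50\%
\qquad\text{versus}\qquad
\frac{o}{t} = \frac{1\,\text{ms}}{2000\,\text{ms}} = 0.05\%
$$

The same overhead that dominates a very fast process is negligible for a long-lived one.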

This may be the best scenario for using ISS: when the running processes make massive use of resources, especially finite ones.

When too many processes of this sort are launched at the same time, there is a very real danger of the resources running out! A process may run out of resources and end with a fatal error, for instance when memory runs out, a very common issue in these situations. This is the most dreaded scenario, the one we wish to avoid, since in these cases the performance curve deteriorates exponentially and normally ends in DoS. Such a curve was easily generated with the Sample Application available on the site, not with the default WriterProcessFileImpl but with WriterProcessStringImpl. The JVM very easily stalls because of processes that consume very large amounts of RAM, until it crashes with out-of-memory errors in several dozen processes, or, many times, even in the main thread itself!

So in these scenarios, using ISS can be the difference between slow, potentially DoS-prone server performance and fast, tuned, constant performance.

ISS was built by its creators to tackle a real-life problem in a critical live web application that works 24x7 for 1 month out of every year. Downtime or low performance in this application can have brutal consequences for the client, so they were simply not an option. See the full description of this case study to learn how ISS helped in this real-life situation.